Returns a new row for each element with position in the given array or map.
>>> from pyspark.sql import Row
>>> from pyspark.sql.functions import posexplode
>>> eDF = spark.createDataFrame([Row(a=1, intlist=[1,2,3], mapfield={"a": "b"})])
>>> eDF.select(posexplode(eDF.intlist)).collect()
[Row(pos=0, col=1), Row(pos=1, col=2), Row(pos=2, col=3)]
>>> eDF.select(posexplode(eDF.mapfield)).show()

Parameters: n : int, default 1. Number of rows to return.

PySpark Groupby Explained with Example — SparkByExamples

pyspark.sql.DataFrame.select
DataFrame.select(*cols)
Projects a set of expressions and returns a new DataFrame. New in version 1.3.0.
Parameters: cols : str, Column, or list. Column names (string) or expressions (Column). If one of the column names is '*', that column is expanded to include all columns in the current DataFrame.

In PySpark, the select() function is used to select a single column, multiple columns, columns by index, all columns from a list, and nested columns from a DataFrame. PySpark select() is a transformation function, hence it returns a new DataFrame with the selected columns.

PySpark filter() function is used to filter the rows from an RDD/DataFrame based on the given condition or SQL expression; you can also use the where() clause …

This topic, the where condition in PySpark with examples, works in a similar manner to the WHERE clause in SQL. Because where() is an alias for filter(), both can filter null or non-null values when the condition uses isNull() or isNotNull(). Sample program in PySpark: in the sample program below, data1 is the dictionary created with key and value pairs and df1 is the …

pyspark.sql.DataFrame.select — PySpark 3.1.2 documentation

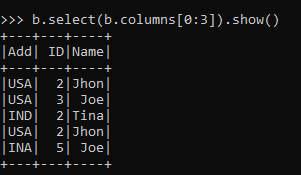

The select() method: after applying the where clause, we select the data from the dataframe. Syntax: dataframe.select('column_name').where(dataframe.column condition). Here, dataframe is the input dataframe, and column is the column name on which we have to raise a condition. Example 1: Python program to return ID based on condition.

Syntax: dataframe.select([columns]).collect()[index], where dataframe is the PySpark dataframe, columns is the list of columns to be displayed in each row, and index is the index number of the row to be displayed. Example: Python code to select a particular row.

PySpark How to Filter Rows with NULL Values — SparkByExamples

Where condition in pyspark with example

Similar to the SQL "HAVING" clause, on a PySpark DataFrame we can use either where() or filter() to filter the rows of aggregated data:
from pyspark.sql.functions import sum, avg, max, col
df.groupBy("department") \
    .agg(sum("salary").alias("sum_salary"), \
         avg("salary").alias("avg_salary"), \
         sum("bonus").alias("sum_bonus"), \
         max("bonus").alias("max_bonus")) \
    .where(col("sum_bonus") >= 50000) \
    .show(truncate=False)

In PySpark, we can select columns using the select() function. The select() function allows us to select single or multiple columns in different formats. Syntax: dataframe_name.select(columns_names)

Spark isin() & IS NOT IN Operator Example — SparkByExamples

True if the current expression is NOT null.
Examples
>>> from pyspark.sql import Row
>>> df = spark.createDataFrame([Row(name='Tom', height=80), Row(name='Alice', height=None)])
>>> df.filter(df.height.isNotNull()).collect()
[Row(name='Tom', height=80)]

PySpark Select Columns From DataFrame — SparkByExamples

According to the Spark documentation, "where() is an alias for filter()".
filter(condition): Filters rows using the given condition. where() is an alias for filter().
Parameters: condition – a Column of types.BooleanType or a string of SQL expression.

>>> df.filter(df.age > 3).collect()
[Row(age=5, name=u'Bob')]
>>> df.where(df.age == 2).collect()
[Row(age=2, name=u'Alice')]
>>> df.filter("age > 3").collect()
[Row(age=5, name=u'Bob')]

Select Columns that Satisfy a Condition in PySpark

PySpark Where Filter Function

pyspark.sql module — PySpark 2.1.0 documentation

pyspark select where

Select columns in PySpark dataframe

Get specific row from PySpark dataframe

Spark

If you are familiar with PySpark SQL, you can use IS NULL and IS NOT NULL to filter the rows of a DataFrame:
df.createOrReplaceTempView("DATA")
spark.sql("SELECT * FROM DATA where STATE IS NULL").show()
spark.sql("SELECT * FROM DATA where STATE IS NULL AND GENDER IS NULL").show()
spark.sql("SELECT * FROM DATA where STATE IS NOT NULL").show(5, …
